TOTW: Google's Project Ara Modular Phone May Be The Future Of Smartphones (October 30, 2014)


The Almost Impossible Ethical Dilemma Behind Autonomous Cars


You’re driving down the road in your Toyota Camry one morning on your way to work. You’ve been driving for 15 years now and pride yourself on the fact that you’ve never had a single accident. And you have to drive a lot, too; every morning you commute an hour up to San Francisco to your office. You pull onto a two-lane street lined on both sides with suburban housing, and suddenly realize you took a wrong turn. You quickly look down at your smartphone, which is running Google Maps, to find a new route to the highway. When you look back up, you’re surprised to see that a group of 5 people, 3 adults and 2 kids, has unknowingly walked into your path. By the time you and the group notice each other, it’s too late for you to hit the brakes or for the pedestrians to run out of the way. Your only option to save the 5 people from being injured, or even killed, by your car is to swerve out of the way… right into the path of a woman walking her child in a stroller. You notice all of this in the half a second it takes you to close the distance between you and the group to only 3-4 yards.

You now have but milliseconds to decide what path to take. What do you do? But more to the point of this article, what would an autonomous car do?

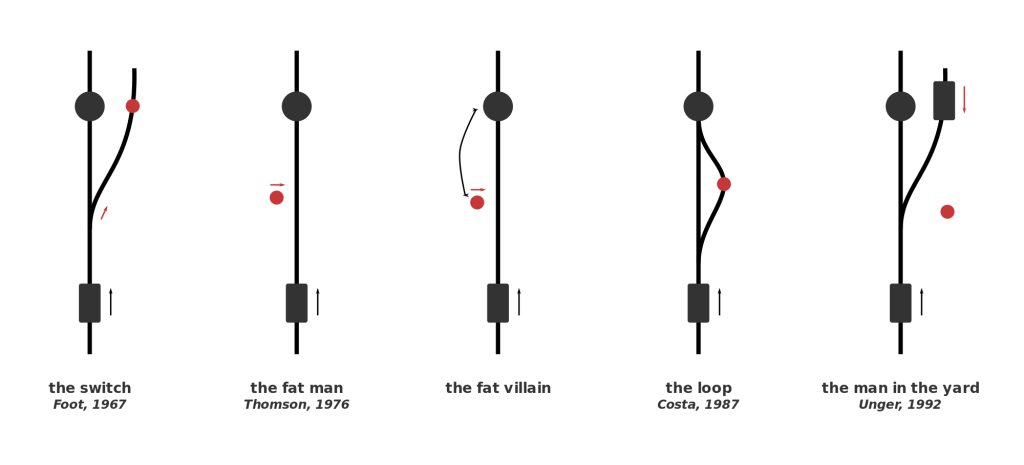

That narrative is a variant of the classic thought experiment known as the Trolley Problem. The Trolley Problem has many variations, some more famous than others, but all of them follow the same general storyline: you must choose between accidentally killing 5 people (e.g., hitting them with your car) or purposefully taking an action (e.g., swerving out of the way) that kills one person. This type of situation is obviously one that no one wants to find themselves in, and it is so unlikely that most people avoid it their entire lives. But in the rare cases where this situation occurs, the split-second decision a human makes will vary from person to person and from situation to situation.

But no matter the outcome of the tragic event, if it does end up happening, the end result will generally be the fault of a distracted driver. What will happen, though, when this decision is completely in the hands of an algorithm, as it will be when autonomous cars ubiquitously roam the streets years from now? Every day, autonomous cars become more a thing of the present than of the future, and that leaves many worried. Driving has been ingrained in us for a century, and for many, giving that control up to a computer will be frightening. This is despite the fact that in the years that autonomous cars have been on the roads, their safety record has been excellent, with only 14 accidents and no serious injuries. While 14 may seem like a lot, keep in mind that each and every incident was actually the result of human error by the driver of another car, and many were the result of distracted driving.

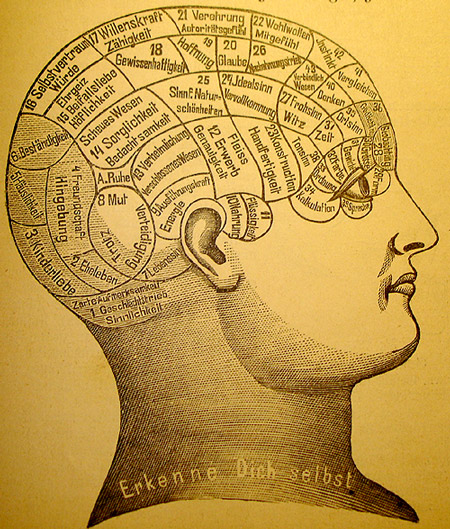

I’d say that people riding in an autonomous car are more worried about situations like the Trolley Problem than about the safety of the car itself. Autonomous cars are just motorized vehicles driven by algorithms, or intricate math equations that can be written to make decisions. When an algorithm written to make a car change lanes and parallel park has to make an almost ethically impossible decision, choosing between letting 5 people die or purposely killing 1 person, we can’t really predict what it would do. That’s why autonomous car makers can’t just let this problem go, and have to delve into the realm of philosophy and build an ethics setting into their algorithms.

This won’t be an easy task, and will require everyone, from the car makers to the customers, to think about what split-second decision they would make, so that the cars can then be programmed to do the same. This ethics setting would have to work in all situations; for instance, what would it do if instead of 5 people versus one person, it was a small child versus hitting an oncoming car? One suggested solution would be to have an adjustable ethics setting, where the customer gets to choose whether to put their own life over a child’s, or to kill one person rather than let 5 people die, etc. This would redirect the blame back to the consumer, giving him or her control over such ethical choices. Still, that kind of decision, which very well could determine the fate of you and some random strangers, is one that nobody wants to make. I certainly couldn’t get out of bed and drive to work knowing that a decision I made could kill someone, and I’d bet I’m not alone on that one. In fact, people may even avoid purchasing an autonomous car with an adjustable ethics setting just because they don’t want to make that decision or live with the consequences.
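To make the idea of an adjustable ethics setting a little more concrete, here is a minimal Python sketch. Everything in it is my own assumption for illustration: the `Outcome` and `EthicsSetting` classes, the two weights, and the simple "people at risk" counts are hypothetical, and no car maker is known to use anything like this. It only shows how an owner-chosen weight could flip the car's choice in the child-versus-oncoming-car example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible maneuver and who it puts at risk (hypothetical model)."""
    name: str
    passengers_at_risk: int
    bystanders_at_risk: int


@dataclass(frozen=True)
class EthicsSetting:
    """Hypothetical owner-adjustable weights: a higher weight means harm
    to that group counts for more when maneuvers are compared."""
    passenger_weight: float = 1.0
    bystander_weight: float = 1.0

    def cost(self, outcome: Outcome) -> float:
        # Weighted count of people put at risk by this maneuver.
        return (self.passenger_weight * outcome.passengers_at_risk
                + self.bystander_weight * outcome.bystanders_at_risk)


def choose(outcomes: list[Outcome], setting: EthicsSetting) -> Outcome:
    """Pick the maneuver with the lowest weighted cost under the setting."""
    return min(outcomes, key=setting.cost)


# A small child in the road versus an oncoming car. As a simplification,
# only the car's own passenger is counted as at risk in the swerve.
options = [
    Outcome("stay in lane", passengers_at_risk=0, bystanders_at_risk=1),
    Outcome("swerve into oncoming car", passengers_at_risk=1, bystanders_at_risk=0),
]

self_protective = EthicsSetting(passenger_weight=2.0, bystander_weight=1.0)
self_sacrificing = EthicsSetting(passenger_weight=1.0, bystander_weight=2.0)

print(choose(options, self_protective).name)   # stay in lane
print(choose(options, self_sacrificing).name)  # swerve into oncoming car
```

The uncomfortable part is visible right in the code: somebody has to type in those two numbers, and whoever does so owns the result.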

So what do we do? Nobody seems to want to make these kinds of decisions, even though making them is absolutely necessary. Jean-François Bonnefon, at the Toulouse School of Economics in France, and his colleagues conducted a study that may help us all come up with an acceptable ethics setting. Bonnefon’s logic was that people will be happiest driving a car whose ethics setting is close to what they believe is a good setting, so he tried to gauge public opinion. By asking several hundred workers on Amazon’s Mechanical Turk crowdsourcing platform a series of questions regarding the Trolley Problem and autonomous cars, he arrived at a general public opinion of the dilemma: minimize losses. In all circumstances, choose the option in which the fewest people are injured or killed; a sort of utilitarian autonomous car, as Bonnefon describes it. But, with continued questioning, Bonnefon came to this conclusion:

“[Participants] were not as confident that autonomous vehicles would be programmed that way in reality—and for a good reason: they actually wished others to cruise in utilitarian autonomous vehicles, more than they wanted to buy utilitarian autonomous vehicles themselves.”

Essentially, people would like other people to drive these utilitarian cars, but are less enthusiastic about driving one themselves. Logically, this is a sensible conclusion. We all know that we should make the right decision and sacrifice our own life for that of someone younger, like a child, or for a group of 3 or 4 people, but when it comes down to it only the bravest among us are willing to do so. While these scenarios are few and far between, the decision made by the algorithm in that sliver of a second could be the difference between the death of an unlucky passenger and that of an even more unlucky passerby. This “ethics setting” dilemma is a problem that can’t just be delegated to the engineers at Tesla or Google or BMW; it has to be one that we all think about and decide on collectively, in a way that will hopefully make the future of transportation a little more morally bearable.
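For the curious, the "minimize losses" rule that the survey respondents favored can be sketched in a few lines of Python. This is an illustration under my own assumptions, not anything from Bonnefon's study or from a real vehicle: the function name `utilitarian_choice` and the idea that the car already knows exactly how many people each maneuver would harm are both hypothetical.

```python
def utilitarian_choice(options: dict[str, int]) -> str:
    """Return the maneuver expected to harm the fewest people.

    `options` maps a maneuver name to the number of people it would
    injure or kill (hypothetical inputs). On a tie, the maneuver
    listed first wins.
    """
    return min(options, key=options.get)


# The opening scenario: 5 pedestrians ahead, or a woman and her child
# in a stroller if the car swerves.
opening_scenario = {"continue straight": 5, "swerve": 2}

print(utilitarian_choice(opening_scenario))  # swerve
```

The rule itself is trivial to write down. The hard part is agreeing that this is the rule we want, and then being willing to ride in the car that follows it.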