TOTW: Google's Project Ara Modular Phone May Be The Future Of Smartphones
October 30, 2014

TOTWs

New Canon Camera Has An ISO Of Over 4 Million!

Capturing moments of our lives has always been an important element of human culture. Before modern technologies existed, people told stories, then later learned to write those stories down. When cameras were invented, people suddenly had the opportunity to take snapshots of their lives, whether spontaneous or artistic, that they could later admire. Nowadays, our phones enable us to easily combine still photos with video, yet there has always been one constraint on capturing and sharing that storytelling alone isn’t affected by: the time of day, i.e., how much light there is. Photos can have perfect composition but be ruined by bad lighting. On the other hand, lighting can be artistically manipulated to create different effects that actually enhance the look (e.g., with filters or digital adjustments).

In photography, there is a technical measure of how sensitive your camera is to the light coming through its aperture, or in other words, how well it responds to bright or dim light when taking a picture. This measure is called the ISO, pronounced “i-sow”, and it is something that even users of early film cameras could choose by buying different film. You could buy ISO 100 film for sunny photos, ISO 200 film for cloudy photos, and ISO 400 film for indoor shots. The higher the ISO, the more sensitive the camera is to dim light. The same rules apply to video. Although older cameras typically only went up to an ISO of around 400, nowadays more expensive cameras go into the thousands. Just recently, Canon released a camera that has the potential to rock the photography/videography world, not for the quality of its photos and videos, although that is excellent too, but for its ISO, which can be set all the way up to 4 million.

The video below is about the CMOS sensor, which has been upgraded slightly over the past two years, but you can still see the incredible video quality.

You may be wondering what that even means. If an ISO of 400 is good for taking photos inside, and ISOs in the thousands are good for even darker lighting, what does an ISO of 4 million, which is 10,000 times more sensitive than what’s needed for indoor lighting, do? Well, it turns out that setting your camera to an ISO of 4 million allows you to literally shoot in the dark, effectively giving your camera night vision. Not infrared night vision, where the picture looks like a color-inverted iPhone screen, but real night vision, meaning you can film at night and the video or image will look almost the same as if you were shooting during the day.
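To make the 10,000x figure concrete, here is a minimal sketch of the arithmetic. It also converts the ratio into photographic "stops", a term the article does not use: by the standard convention, each doubling of ISO counts as one stop. The function names are made up for illustration.

```python
import math

def iso_ratio(iso_high: float, iso_low: float) -> float:
    """How many times more sensitive iso_high is than iso_low."""
    return iso_high / iso_low

def stops_between(iso_high: float, iso_low: float) -> float:
    """Number of stops (doublings of sensitivity) between two ISO values."""
    return math.log2(iso_high / iso_low)

# ISO 400 (indoor film) versus the ME20F-SH maximum of 4 million
ratio = iso_ratio(4_000_000, 400)
stops = stops_between(4_000_000, 400)
print(f"{ratio:,.0f}x more sensitive, about {stops:.1f} stops")
```

Under that convention, the jump from ISO 400 to ISO 4 million works out to a little over 13 doublings of sensitivity.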

This technology was invented by Canon back in 2013 with their CMOS sensor, which just got integrated into Canon’s new camera, the Canon ME20F-SH. The camera is essentially just a cube with a lens, being surprisingly small, only around 4 inches across. It weighs two pounds, which is fairly heavy for a camera, but still allows the device to be used in a wide variety of situations and doesn’t inhibit its portability. Even though bringing the ISO up on regular cameras makes the video quality worse, the ME20F-SH still shoots at HD quality, allowing serious film-makers to use this camera for professional films.

Specs aside, this camera opens up a whole new world of possibilities for film-makers. From cave explorers to experimental directors, this camera can be used in an incredible variety of ways simply for the fact that it can see in the dark. Now, the camera isn’t for amateur photographers or directors who simply want to get a clear night sky shot, as after all, the expected price of the camera is $30,000. But for people who do have the ideas and also have the money, this camera may totally change the way they film. For the first time in the history of capture-based art and storytelling, light isn’t an obstacle.

Augmented Vs. Virtual Part 2 – Augmented Reality

Reality is very personalized: it is how we perceive the world around us, and it shapes our existence. And while individual experiences vary widely, for as long as humans have existed, the nature of our realities has been broadly relatable from person to person. My reality is, for the most part, at least explainable in terms of your reality. Yet as technology grows better and more widespread, we are coming closer to an era where my reality, at least for a period of time, may be completely inexplicable in terms of your reality. There are two main ways to do this: virtual reality and augmented reality. In virtual reality, technology immerses you in a different, separate world. My earlier article on VR was the first of this two-part series, and can be found HERE.

Whereas virtual reality aims to totally replace our reality with a new vision, augmented reality does what the name suggests: it augments, changes, or adds on to our current, natural reality. This can be done in a wide variety of ways, the most popular currently being a close-to-eye translucent screen with projected graphics on top of what you are seeing. This screen can take up your whole field of view, or just the corner of your vision. Usually, the graphics or words displayed on the screen are not completely opaque, since they would then be blocking your view of your real reality. Augmented reality is intrinsically designed to work in tandem with your current reality, while VR dispenses with it in favor of a new one.

With this more conservative approach, augmented reality (AR) likely has greater near-term potential. For VR, creating a new world to inhabit limits many of your possibilities to the realm of entertainment and education. AR, however, has a practically unlimited range of use cases, from gaming to IT to cooking to, well, pretty much any activity. Augmented reality is not limited to, but for now works best as a portable heads-up display, a display that shows helpful situational information. For instance, there was a demo at Epson’s booth at Augmented World Expo 2015 where you got to experience a driving assistance app for AR. In my opinion, the hardware held back the software in that case, as the small field of view was distracting and the glasses were bulky, but you could tell the idea has some potential. At AWE, industrial use cases as well as consumer use cases were also prominently displayed, which included instructional IT assistance, such as remotely assisted repair (e.g., in a power plant, using remote visuals and audio to help fix a broken part).

Before I go on, I have to mention one product: Google Glass. No AR article is complete without mentioning the Google product, the first AR product to make a splash in the popular media. Yet not long after Google Glass was released, it started fading out of the public eye. Obvious reasons included the high price, the very odd look, and the sheer novelty: people couldn’t think of ways they would use it. Plus, with the many legal and trust issues that went along with using the device, it often just didn’t seem worth the trouble. Rumor has it that Google is working on a new, upgraded version of the device, and it may make a comeback, but in my opinion it’s too socially intrusive and new to gain significant near-term social traction.

Although many new AR headsets are in the works (most importantly Microsoft’s HoloLens), the development pace is lagging behind VR, which is already at the stage where developers are focused on enhancing current design models, as I discussed in the previous VR article. For AR, the situation is slightly different. Hardware developers still have to figure out how to create an AR headset that is cheap but also has a full field of view, is relatively small, and doesn’t obstruct your view when not in use, among other complications. In other words, the hardware of AR still gets in the way of consuming AR content, while for VR hardware, the technology is well on its way to overcoming that particular obstacle.

Beyond these near-term obstacles, if we want to get really speculative, there could be a time when VR will surpass AR even in pure utility. This could occur when we are able to create a whole world, or many worlds, to be experienced in VR, and we decide that we like these worlds better. When the immersion becomes advanced enough to pass for reality, that’s when we will abandon AR, or at least over time use it less and less. Science fiction has pondered this idea, and from what I’ve read, most stories go along the lines of people just spending most of their time in the virtual world and sidelining reality. The possibilities are endless in a world made completely from the fabric of our imagination, whereas in our current reality we have a lot of restrictions on what we can do and achieve. Most likely, this is a long, long time away, so we have nothing to worry about for now.

Altogether, augmented reality and virtual reality are both innovative and exciting technologies that have tremendous potential to be useful. On one hand, AR will most likely be used more than VR in the coming years for practical purposes, since it’s grounded in reality. On the other hand, VR will be mostly used for entertainment, until we hit a situation like the one I mentioned above. It’s hard to pit these two technologies against each other, since they both have their pros and cons, and it really just depends on which tech sounds most exciting to you. Nonetheless, both AR and VR are worth lots of attention and hype, as they will both surely change our world forever, for better or worse.

Augmented vs. Virtual Part 1 – Virtual Reality

Technologically enhanced vision has been with us for many hundreds of years, with eyeglasses having been in use since at least the 14th century. Without effective sight, living has of course remained possible during this era, but it is a meaningful disadvantage. Now, new technologies are offering the promise to not only make our lives easier, but to also give us new capabilities that we never thought possible.

This idea, enhancing our vision using technology, encompasses a range of technologies, including the two promising arenas of augmented reality (AR) and virtual reality (VR). The names are fairly self-explanatory; augmented reality supplements and enhances your visual reality, while virtual reality by contrast creates a whole new reality that you can explore independently of the physical world. Technically, AR hardware generally consists of a pair of glasses, or see-through panes of glass attached to hardware, which runs software that projects translucent content onto the glass in front of you. VR, on the other hand, is almost always a shoebox/goggle-like headset, with two lenses allowing two different screens in front of your eyes to blend into one, using head motion-tracking to make you feel like you are in the virtual world. Both are very cool to experience, as I found while attending the Augmented World Expo last week in Silicon Valley, where I was able to demo a host of AR and VR products. This article focuses on my experiences with Virtual Reality gear; next week I will follow up with thoughts on Augmented Reality.

Virtual Reality

Virtual reality, when combined with well-calibrated head-tracking technology, allows you to be transported into a whole new world. You can turn your head, look around, and the software responds as if this world is actually around you, mimicking real life. This world can be interactive, or it can be a sit-back-and-relax type experience. Both are equally astounding to experience, as the technology is advanced enough so that you can temporarily leave this world and enter whatever world is being shown on your head mounted display (HMD). I wrote about a great use-case of VR at the AWE Expo recently, which involved being suspended horizontally and strapped into a flight-simulation VR game.

Despite what you might think, the optics no longer seem to be a problem, as the engineers at early leaders including Oculus and Gear VR have designed headsets that don’t bother our eyes during use, a problem that plagued early models. That said, complaints persist about vertigo and eye-strain from long periods of use. Even Brendan Iribe, Oculus CEO, got motion sickness from their first Dev kit. Luckily, his company and others have been making improvements to the software. Personally, I didn’t get sick in the least while at the conference.

Uses for VR, among many, tend to fall into one major category thus far: entertainment. Video games are set to be transformed by virtual reality, which promises to bring a new dimension to what could be possible in a gaming experience. First-person shooters and games of that ilk were already trying to become as real and immersive as possible on a flat screen, but with a 360-degree view around the player, and interactive head-tracking… well, it’s surprising that games like Halo, Destiny and Call Of Duty don’t already have VR adaptations. And games with more artistic themes and play will also benefit greatly from using VR rather than 2D screens, as adding the ability to look around and feel like you are in the game will surely spark ideas in many developers’ heads. At E3 2015, which took place this week in Los Angeles, many commented that virtual reality was an obvious trend in gaming this year, and excitement was starting to build about VR’s potential in gaming. While hardly a gaming-exclusive environment, VR appears to be a promising tool for immersive military training as well. Nothing prepares a soldier or a pilot for a situation on the battlefield or in the air better than having already pseudo-experienced it. The possibilities for gaming and military training are endless in terms of VR, and it really is exciting to see what developers are coming up with.

One thing that may hold VR back is the hardware. Although the vertigo issues have been mitigated, another hardware complaint has been weight. The Oculus Dev Kit 2 weighs a little less than 1 pound, which isn’t much, but can be strenuous when worn for a long period of time. Still, if we have learned anything from the growth of smartphones, it’s that technology marches in one clear direction: smaller, lighter, and faster. And that’s one thing that I believe separates AR and VR: VR is already at the point where the only changes needed are upgrades to the existing hardware, namely the pixel density, the graphics speed, the weight, and the size. In a few years, many of the major problems with VR will likely be solved.

Whereas all VR has to do is get the hardware right and then integrate head-tracking software into 3D games or movies, AR has a ways to go until it has perfected its hardware to the same level as VR has. AR is frankly just harder for the developers. Not only do they have to worry about the pixel density, head-tracking, weight, and size like VR, but they have to worry about depth, the screen transparency, object recognition, 3D mapping, and much more. Currently, there isn’t one big AR player, like Oculus, that small developer teams can use as a platform for their own AR software, and that might also be limiting the growth of the technology. A big player may emerge in the next couple of years, with Google Glass and Microsoft’s upcoming AR headset HoloLens leading the race, but for now, AR isn’t really an area where small development teams can just jump in.

In the grand scheme of things, AR and VR are at similar stages of development. Within a decade or two, these problems will vanish, and the technologies will be face-to-face, with the only thing separating them being their inherent utility in particular situations. VR is a technology that was made for entertainment and gaming. The idea of transporting yourself to another world, especially when the tech is fully developed and you can’t tell the difference between VR and real life, is as exciting as it is terrifying. Still, we can’t help but try to create these amazing games and experiences, as they very well may expand humanity into virtual worlds we never could have dreamed of. As developers start meddling with the technology, and consumers start buying units, VR will grow into many more markets, but for now, entertainment, gaming, and military training are the main uses. It really is a technology out of the future, and I can’t wait to see what amazing experiences and tools VR will bring to the world next.

This is the first piece in a two-part series on AR vs. VR. Check back here soon for the second article!

The James Webb Space Telescope – An Astronomer’s Dream

Astronomy is all about looking up at the stars, trying to figure out how the universe works and where our place as humans on Earth is in that giant universe. Where geneticists and particle physicists work on the smallest scales, astronomers work on the largest physical scales: the firmament. For a long time, the naked eye and then simple telescopes were enough to make productive observations, but science has reached a point in astronomical development where we need ever better equipment to realize new discoveries. The bigger, more expensive, and more technically advanced the telescope, the better. And sending it into space is even better, to render the best images and readings.

That seems like a big ask, and it is. The Hubble Space Telescope made its way into popular culture history as the first scientific telescope the public actually knew and cared about. Well, in 2018, a new telescope will be launched that is even greater than the legendary Hubble. It’s called the James Webb Space Telescope (JWST), and it’s pretty much an astronomer’s dream.

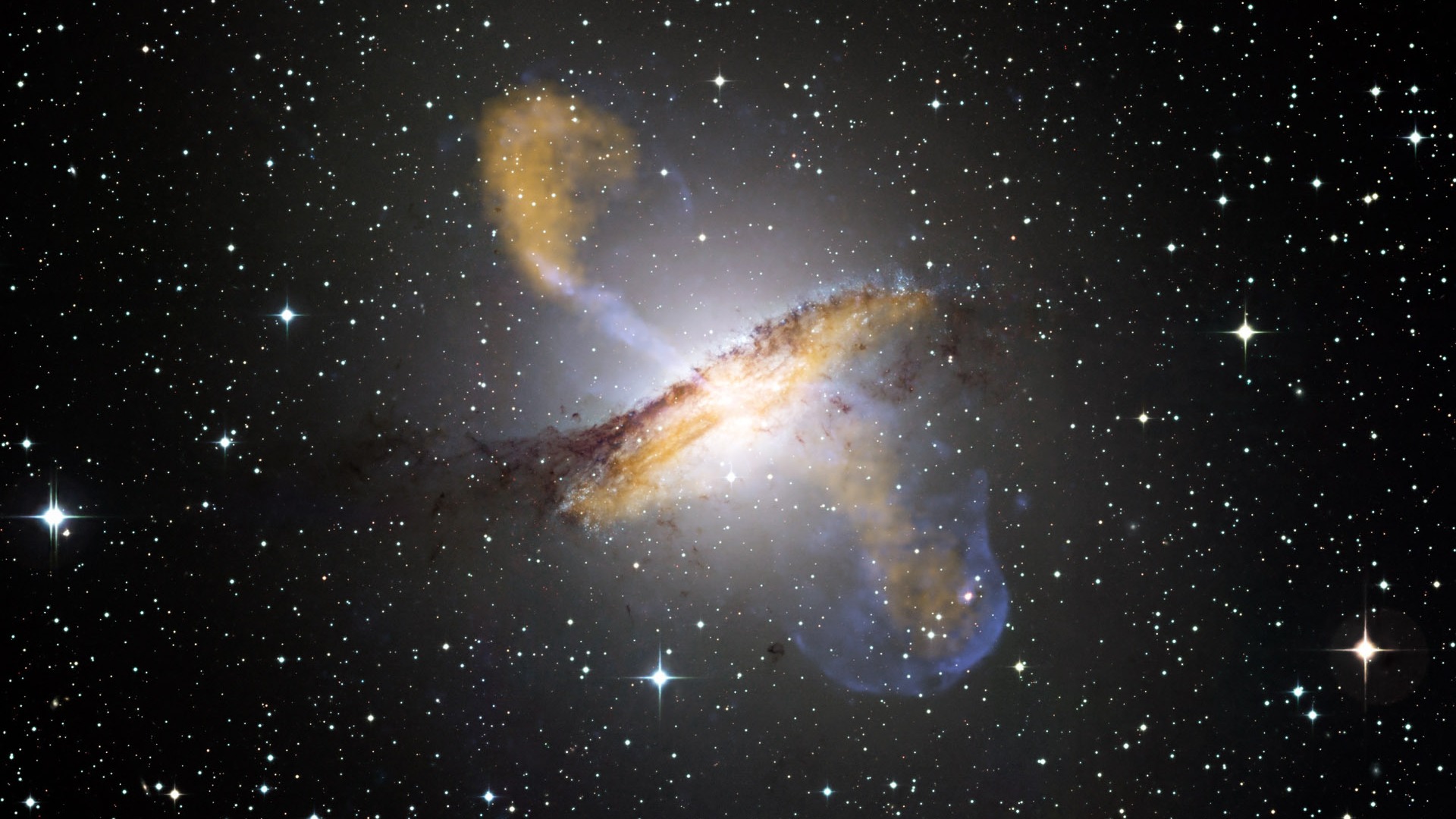

“Why an astronomer’s dream?”, you may be asking. The answer is fairly straightforward: the JWST is a gigantic, high-tech, multi-purpose instrument. To put it in perspective, the Hubble telescope has a mirror, essential in capturing astronomical images, about 8 feet across. The 8-foot mirror produced images like this:

Now consider the JWST. It is planned to have a mirror a whopping 21 feet and 4 inches in diameter. The incredible size of that mirror, which is made up of 18 smaller, hexagonal mirrors, is only one of the many features of the telescope that are making astronomy nerds all over the world very excited. Being a multi-purpose telescope, the JWST has much to offer scientists. Below I describe only a handful of JWST’s most prominent features, abilities, and facts:
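As a rough illustration of what the two mirror sizes mean for light-gathering power, the sketch below compares their areas. It assumes both mirrors are simple filled circles with the diameters quoted above, which ignores the central holes and the hexagonal outline of the JWST segments, so treat the result as a ballpark figure only.

```python
import math

def circle_area(diameter: float) -> float:
    """Area of a circle with the given diameter (same units, squared)."""
    return math.pi * (diameter / 2) ** 2

HUBBLE_DIAMETER_FT = 8.0        # "about 8 feet across"
JWST_DIAMETER_FT = 21 + 4 / 12  # 21 feet and 4 inches

# Simplifying assumption: both mirrors are treated as filled circles
area_ratio = circle_area(JWST_DIAMETER_FT) / circle_area(HUBBLE_DIAMETER_FT)
print(f"JWST mirror area is roughly {area_ratio:.1f}x Hubble's")
```

Because area grows with the square of the diameter, a mirror under three times as wide collects several times as much light.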

Infrared Radiation Detection

The James Webb Space Telescope detects infrared wavelengths of light, rather than the visible spectrum. If you’re not an astronomy nerd, you may wonder why this difference is significant. Well, infrared is close enough to the visible range that telescopes can use the light to create an image our eyes can understand, but it is far enough outside our visible range to distort the colors, and it also has some key unique qualities. For instance, unlike visible light, infrared light is far less impeded by interstellar dust and gas. This means that the JWST will have largely unobstructed views of what were previously clouded stellar nurseries, where stars form. Hubble couldn’t peer effectively into these nurseries due to their surrounding gas and dust, but the JWST can. This will give astronomers a look into the formation of stars, which is still shrouded in mystery.

Not only that, but infrared radiation emanates from cooler objects: you have to be as hot as fire to give off significant amounts of visible light, and the Earth is obviously not, but everything from a tree to you emits infrared light, which is precisely how thermal night-vision goggles function. More importantly, planets emit most of their own radiation in the infrared, while stars are so hot that their light peaks at visible (and shorter) wavelengths. That means we may be able to take far better photos of exoplanets themselves. In visible light, as seen by Hubble, stars far outshine even the biggest of planets, by factors of 100 to 1,000 or more. Since stars outshine planets by a much smaller margin in the infrared, we will be able to focus on the planets themselves, and may even get detailed images of planets outside our own solar system. Pretty exciting, even for non-astronomy nerds.
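The link between temperature and the kind of light an object gives off can be sketched with Wien's displacement law, which says the peak wavelength of a warm body's glow is inversely proportional to its temperature. The article does not mention this law; it is brought in here as the standard physics behind the claim. The temperatures are round, assumed figures, and the band boundaries are approximate.

```python
# Wien's displacement law: peak wavelength = b / T
WIEN_B_UM_K = 2897.77  # Wien's displacement constant, in micrometre-kelvins

# Assumed round-number temperatures in kelvin, for illustration only
TEMPERATURES_K = {
    "Sun-like star surface": 5800,
    "human body": 310,
    "Earth-like planet": 288,
}

VISIBLE_RANGE_UM = (0.38, 0.75)  # approximate limits of human vision

def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometres) where a blackbody at this temperature glows brightest."""
    return WIEN_B_UM_K / temperature_k

def band(wavelength_um: float) -> str:
    """Coarse label for a wavelength; anything past red is lumped under infrared."""
    low, high = VISIBLE_RANGE_UM
    if wavelength_um < low:
        return "ultraviolet or shorter"
    if wavelength_um <= high:
        return "visible"
    return "infrared"

for name, temperature in TEMPERATURES_K.items():
    peak = peak_wavelength_um(temperature)
    print(f"{name}: peak near {peak:.2f} micrometres ({band(peak)})")
```

With these assumed temperatures, the star peaks inside the visible band while the person and the planet peak deep in the infrared, which is why an infrared telescope is better placed to pick out planets.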

Lagrange Point

So, where will this telescope be orbiting? Technically, it’s orbiting the sun, but the JWST will reside at a Lagrange Point in our solar system, which is a very cool astrophysical place where, and this is an oversimplification, the gravity of the sun and the earth balance out so that the telescope could be thought of as not orbiting anything at all, but rather just floating still in space relative to the Earth. Because the gravity of our planet and the sun are in balance at Lagrange points, the telescope can hold a stable position with a steady view of the stars. There are three such Lagrange Points on the Earth-Sun axis: L1, directly between the Sun and Earth; L2, on the other side of the Earth away from the sun; and L3, on the other side of the sun entirely. JWST will reside at L2.

This, of course, has upsides and downsides. First of all, being at the Lagrange Point means that it is roughly 1 million miles away from Earth, i.e., we will have no way of fixing it if anything happens to it. And, as Hank Green reminds us in the video above, we have had to fix the Hubble a bunch of times, and that just won’t be possible with the JWST. Basically, we had better get it right the first time. Also, the JWST is so massive that it can’t fit into a rocket fully assembled, so NASA engineers have had to design a complex unfolding system that could go wrong at any moment.
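For a sense of how far away L2 sits, the sketch below uses a common textbook approximation for the L1 and L2 distance from the smaller body: the orbital radius times the cube root of one third of the mass ratio. The formula and the constants are not from the article; they are standard approximate values, and the result is an estimate rather than mission data.

```python
AU_KM = 149_597_870.7            # mean Earth-Sun distance in kilometres
EARTH_SUN_MASS_RATIO = 3.003e-6  # Earth's mass divided by the Sun's (approximate)
KM_PER_MILE = 1.609344

def l2_distance_km(orbit_radius_km: float, mass_ratio: float) -> float:
    """Approximate distance of L1/L2 from the smaller body.

    Uses r = a * (m / (3 * M)) ** (1/3), which is valid when the
    smaller body is much lighter than the larger one.
    """
    return orbit_radius_km * (mass_ratio / 3) ** (1 / 3)

distance_km = l2_distance_km(AU_KM, EARTH_SUN_MASS_RATIO)
distance_miles = distance_km / KM_PER_MILE
print(f"L2 is about {distance_km:,.0f} km ({distance_miles:,.0f} miles) from Earth")
```

The estimate comes out on the order of 1.5 million km, several times farther away than the Moon.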

It Can See 13.4 Billion Years Into The Past

Yup, you read that right. Hubble could look far into the past, but not nearly as far as the JWST. Given the time light takes to reach our Earthbound eyes, we’re always seeing the universe as it existed in the past. As a result, the farther away you focus your telescope, the closer to the Big Bang you are able to see. Whereas the Hubble Ultra-Deep Field could look 7-10 billion years into the past, the James Webb Space Telescope, with its much larger mirror, can peer fully 13.4 billion years into the past, almost reaching the point of “first light.” First light was the time after the Big Bang when the universe had cooled to a point where the very first galaxies could form and begin to radiate light. With the JWST, we are literally seeing almost all the way back in time to the beginning of the universe. There’s no doubt that this will allow astronomers and cosmologists to answer many previously unanswerable questions about how the universe formed. If this doesn’t make you excited for the launch of the telescope in 2018, nothing will.

Now that you’ve heard all that, can you possibly not be counting down the days to the launch three years from now? To recap: the JWST can take pictures of planets outside our solar system, see stars being born, and see the first galaxies in the entire universe being born. It sounds like something out of a science fiction book, but it’s not. NASA expects to spend $8.7 billion on this telescope, which is a lot, but in my opinion, the investment is far better than spending that amount for a popular instant messaging app, as Facebook recently did. The James Webb Space Telescope is truly an astronomer’s dream, and I can’t wait to see what discoveries are made because of it.

The Void Brings Virtual Reality To Life

No doubt, virtual reality is a revolutionary technology in the field of gaming, military training, and more. Vision makes up so much of our reality that when it is altered or augmented, we can feel like we are in a totally different world, even if things are occurring in that world that we know can’t really happen (e.g., aliens attacking, cars flying, or dragons breathing fire). This is the power and potential of virtual reality products currently under development such as Project Morpheus and Oculus Rift; they can make you believe that you are living in a fantasy world.

The new post-beta Oculus headsets appear to represent a big step forward in immersive gaming, despite lacking one major ingredient of fully immersive gaming: the actual feeling of running away from an enemy, picking up objects, and jumping over a pit of lava. Although the experience is pretty good when only your vision is augmented, the last step toward achieving something I would consider fully immersive is building a gaming system that makes you physically feel like you are in the game. And that is exactly what Ken Bretschneider, founder of The Void, is trying to achieve.

“I wanted to jump out of my chair and go run around,” Bretschneider said. “I wanted to be in there, but I felt like I was separated from that world just sitting down playing a game. So I often would stand up and then I couldn’t do anything.” – Ken Bretschneider

Although still conceptual, the Void’s product is aiming to take virtual reality gaming to the next level. The idea behind the company is pretty simple: they will create “Void Entertainment Centers” that will use high-tech virtual reality technology, along with real, physical environments to create the ultimate VR experience. Sounds awesome.

The execution of this idea, however, is very complicated.

There are many technical obstacles to creating a fully immersive VR experience. First of all, you need state-of-the-art tracking systems, not only because it will make the experience more realistic, but also since you don’t want the players running into walls (or each other) because the VR headset lagged slightly or didn’t depict the object in the first place.

Also, the VR headset itself had better be up to par, otherwise the whole experience isn’t worth it. According to The Void’s website, their “Rapture HMD” (head-mounted display) is as good as, if not better than, other VR headsets such as the recently announced Oculus Rift set for release later this year. With a screen resolution of 1080p for each eye, head-tracking sensors that are accurate to sub-millimeter precision, a mic for in-game communication, and high-quality built-in THX headphones, the Rapture HMD isn’t lacking in impressive specs. Whether it is ultimately good enough to feign reality, though, is a question that will only be answered when the headsets go into production and become part of The Void’s immersive experience.

The Void gear includes not only the HMD but also a set of special tracking gloves — to make your in-game hands as real as possible — and a high-tech vest to provide haptic feedback in response to virtual stimuli. But the technology alone does not suffice, as that is replicable outside of The Void. What would make the experience unique is the physical environment around the players and built into The Void’s Entertainment Centers. In each game center, the first of which is planned to be built in Pleasant Grove, Utah, there will be an array of different stages prepared for players to experience a variety of virtual games. In every “Game Pod”, there are features that make playing there more immersive, such as objects you can pick up and use during the game, elevation change in the platforms, and even technologies that simulate temperature changes, moisture, air pressure, vibrations, smells, and more. All of these mental stimuli outside of the game will be designed to trick your brain into thinking it’s in the game, and that’s pretty much exactly the experience The Void is trying to provide.

Overall, The Void is a big step towards a new age in gaming. For as long as gaming has been around, the actual stimuli coming from the game have been purely vision- and hearing-based; now, by incorporating real objects, physical surroundings, and the environment-based technologies mentioned above, we are nearing a completely immersive experience (a la Star Trek’s holodeck). Science fiction writers have long pondered virtual systems that realistically simulate other worlds, and The Void is potentially one step closer to that ideal. Whether or not we are heading rapidly in that sci-fi direction, for now The Void’s Entertainment Centers would certainly be a lot of fun.

The Simulation Argument Part #2 – The Hypothesis Explained

This is the second article in a two-part FFtech series on The Simulation Argument. If you haven’t already read the first article go HERE before reading the following.

In the previous FFtech article on the Simulation Argument, we established that Bostrom’s statement that the first proposition is false is a reasonable assumption. Just to remind you, these are the propositions, and one has to be true:

#1. Civilizations inevitably go extinct before reaching “technological maturity,” the time at which civilization can create a simulation complex enough to simulate conscious human beings. Meaning: no simulations.

#2. Civilizations can reach technological maturity, but those who do have no interest in creating a simulation that houses a world full of conscious humans. Meaning: no simulations. Not even one.

#3. We are almost certainly living in a simulation.

Now, on to the second postulate. Since we have decided that there are quite likely alien civilizations in the universe that have developed an ability to create “ancestor simulations”, as Bostrom likes to call them, the second postulate says that those civilizations just have no interest in creating a simulation of fully conscious human beings. Most likely, a civilization creating a simulation of how humans lived before they reached “technological maturity” will be future humans, as it is less likely that we will have met an alien civilization before we develop the capacity to create an ancestor simulation of our own, since the distance to other habitable star systems is simply too great.

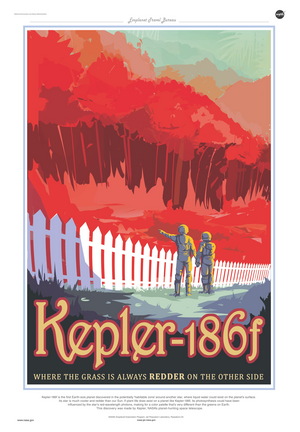

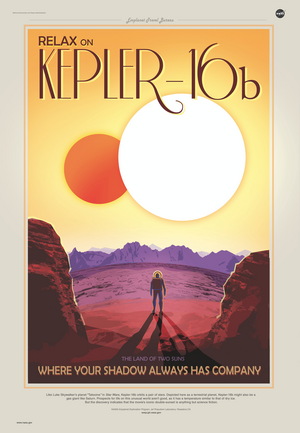

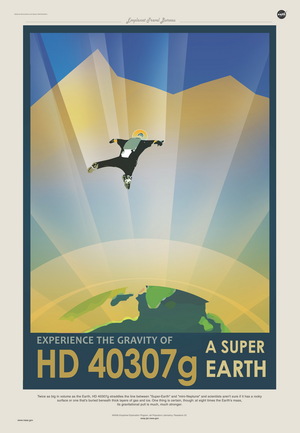

(ABOVE: Some very cool illustrations of hypothetical travel ads for habitable planets found with the Kepler satellite)

However the simulation is created, it seems much more likely than not that at least one person (or alien) starts a simulation. If current trends continue, such as the definite interest in our ancestors shown in the multitude of historical studies, there will be plenty of people who would like to simulate how their ancestors lived. I know that I would find a simulation of a Greek town fascinating, for example. The idea that not a single person would want to create a simulation seems unlikely, and so the second postulate is likely false.

So, Bostrom’s first postulate is probably false, and the second postulate is just plain unlikely given human (and, more arguably, alien) nature. Based on that, do we now live in a simulation? Well, not yet. Just because an ancestor simulation exists doesn’t mean that you’re living in it. First, you have to consider the virtual “birth rate” of these simulations. Bostrom also supposes that it takes a lot more effort and time to create a real human than it would a virtual one once a sufficiently advanced technology is developed. Therefore, the ancestor simulation (or simulations) could have many orders of magnitude more virtual humans living inside it than there are actual humans living outside the computer and controlling the simulation. So, if there are thirty virtual humans for every real human, or even up to 1,000 virtual humans for every real human, that means the probability that you are one of the few “real” humans rather than a simulated human is very low.
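The counting step in that argument can be written out directly. The sketch below assumes that you are equally likely to be any one of the minds that exist, simulated or not, so the chance of being "real" is just the real share of the total. The function name is made up for illustration, and the two ratios are the ones quoted above.

```python
from fractions import Fraction

def probability_real(simulated_per_real: int) -> Fraction:
    """Chance of being a real human if every mind is equally likely to be you.

    For each real human there are `simulated_per_real` simulated ones,
    so real humans make up 1 out of every (1 + simulated_per_real) minds.
    """
    return Fraction(1, 1 + simulated_per_real)

for ratio in (30, 1000):
    p = probability_real(ratio)
    print(f"{ratio} simulated per real human: P(real) = {p} (about {float(p):.2%})")
```

At 30 to 1 the chance of being real is 1 in 31, and at 1,000 to 1 it drops to 1 in 1,001, so the conclusion depends heavily on how many simulated people are assumed to exist.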

And that, my friends, is the simulation hypothesis.

Of course, there are many assumptions made here, some clear and others subtle, some of which could be used to attack Bostrom’s argument. For instance, one of the major assumptions that Bostrom makes is what he calls “Substrate Independence”. Substrate Independence is the idea that a working, conscious brain can, as he writes in his original paper,

“…supervene on any of a broad class of physical substrates. Provided a system implements the right sort of computational structures and processes, it can be associated with conscious experiences. It is not an essential property of consciousness that it is implemented on carbon-based biological neural networks inside a cranium: silicon-based processors inside a computer could in principle do the trick as well.”

Basically, Substrate Independence is the idea that consciousness can take many forms, only one of which is carbon-based biological neural networks. This form of Substrate Independence is pretty hard to grasp, which is why Bostrom argues that the full form of Substrate Independence isn’t actually needed for an ancestor simulation. Really, the only form of Substrate Independence needed to create an ancestor simulation is a computer program running well enough to pass the Turing Test with flying colors.

Besides Substrate Independence, most of the rest of the Simulation Argument is fairly simple. Since we have already deduced that there is likely to be at least one ancestor simulation in existence, the likelihood that we are living in that simulation is pretty high. The logic behind this is that the ancestor simulation doesn’t have a fixed birth rate. Its creators could put as many simulated people in it as they want (Bostrom states this as obvious, though it is debatable), so there would be many orders of magnitude more simulated people than actual humans outside a simulation, and even more if multiple simulations are running at the same time. The present-day parallel is online video games such as MMORPGs, which keep getting bigger and bigger, with many more characters created in those games every second than real humans are born.

Obviously, this argument is very speculative. Substrate Independence, ancestor simulations, the whole thing; it all just seems too far-fetched to be true. And, after all, Bostrom isn’t a computer scientist; he works at the Faculty of Philosophy at Oxford. But that doesn’t mean his argument is false by nature. In his original paper he goes into incredible detail about computing power and Substrate Independence, and even creates a mathematical formula for calculating the probability that we live in a simulation. In fact, if we want to get technical, Bostrom categorizes what I have told you so far as the Simulation Hypothesis, while the full Simulation Argument consists of the probability equation Bostrom created, some “empirical” facts, and their relation to irrefutable philosophical principles. If you want to read Bostrom’s brilliant, albeit a little wordy, paper, click HERE.

To sum it up: a man named Nick Bostrom created a series of logical “propositions” that, when examined closely, seem to suggest that there is a very high probability that we are living in a simulation. In fact, the probability is so high that to close his paper, Bostrom writes:

“Unless we are now living in a simulation, our descendants will almost certainly never run an ancestor simulation.”

You may take this however you want. Personally, I think the simulation argument is one of the coolest things to ever come out of philosophy. And if it’s true that we live in a simulation, that only makes it cooler. After all, we will never know for sure whether we live in a simulation or not, and either way, it doesn’t affect your life in the slightest. You have no choice but to continue living your life as you did, maybe in a simulation, maybe not. All this shows is that as technology continues to develop at a rapid pace, we are getting closer and closer to even the wildest of science fiction technologies becoming a reality.

Sources: https://www.youtube.com/watch?v=nnl6nY8YKHs http://www.simulation-argument.com/simulation.html

The Simulation Argument Part #1 – The First Proposition

09 years

This is the first article in FFtech’s series on The Simulation Argument. Enjoy, and check back for the following articles in the upcoming weeks.

*Warning – the following is incredibly speculative.*

Life seems real, right? This sounds like an obvious statement – of course life is real. That’s what life is. We are living, breathing humans, going about our daily lives, playing our part in the grand theater of life.

Or so we think.

Ok ok, I’ll stop being dramatic. This isn’t what you think; I’m not going to tell you that you’re a reincarnation of a turtle, or that you’re a ghost or spirit. But what I’m going to propose may seem even more preposterous. Brace for it. Ready? Using logical steps, some are arguing that they can prove the likelihood that we are living purely in a simulation is very high.

Whaaaat?? How could we possibly be living in a simulation, with just a bunch of code constituting our entire existence? This idea, famously popularized in the film The Matrix, now has a rigorous philosophical argument behind it, created by Nick Bostrom. Instead of humans being controlled by aliens in a sci-fi thriller, however, Bostrom’s Simulation Argument follows a sequence of logical steps in an effort to demonstrate that there is a greater chance than we might think that we are living in a simulation. The argument Bostrom put together has three propositions, at least one of which must be true:

#1. Civilizations inevitably go extinct before reaching “technological maturity,” the time at which civilization can create a simulation complex enough to simulate conscious human beings. Meaning: no simulations.

#2. Civilizations can reach technological maturity, but those who do have no interest in creating a simulation that houses a world full of conscious humans. Meaning: no simulations. Not even one.

#3. We are almost certainly living in a simulation.

Hold it right there, you may be saying. It’s obvious that the first one is true, meaning we couldn’t be in a simulation. That statement, that the first postulate is true, seems logical, but when put through a bunch of philosophical hurdles, it doesn’t hold water. The reason that #1 is probably wrong is that the likelihood of sophisticated alien civilizations existing is actually quite high. Although the details are beyond the scope of this article, the number of habitable planets, including planets outside of the Habitable Zone that still may harbor life, is extremely large. Somewhere in the universe, intelligent life must have evolved. Once we have accepted that conclusion, it becomes less likely that this stage of development is unreachable by every one of these civilizations. From an evolutionary perspective, we as humans on Earth are arguably not too far away from being able to simulate a full chemical human mind, and within, say, 100-1,000 years we may have developed a simulation similar to the one Bostrom is hypothesizing.

One of the main principles of science, as the great Carl Sagan says in his famous Pale Blue Dot passage, is that humans on Earth aren’t special, chosen to be the singular life form in the universe. The Milky Way isn’t special at all; it is quite ordinary among galaxies, in fact. If every other alien civilization becomes extinct within 100-1,000 years of our level of technological development, it would certainly make us one very special species. It seems much more likely that at least one civilization, whether it is future humans or an alien species, develops to this advanced level of sophistication. Thus, after some considerable mental wrestling, the first postulate is deemed by Bostrom to be most likely false.

But, as even Bostrom himself admitted, we don’t have sufficient evidence against either of the first two propositions to completely rule them out. There are plenty of theories that favor a hypothetical “Great Sieve” (usually called the Great Filter), some event that will happen to every advanced civilization in the universe and drive it to extinction, and that will do the same to us once we reach that stage. Maybe it will be a technology that, once discovered, causes every civilization to ultimately destroy itself (e.g., genetic manipulation, nuclear power and weapons, bio-engineered diseases, etc.). The Great Sieve has also been used as an explanation for why we haven’t already detected some type of alien life form, but the jury is still out on that one. Whatever the Great Sieve may actually be, we still can’t say with complete confidence that the first postulate is 100% wrong. But, in the absence of any conclusive argument for a “Great Sieve”, we will for now follow along with Bostrom and say the first postulate is probably false.

This is the first big step in the Simulation Argument. The rest of the argument is built upon the idea that alien civilizations could actually develop and use ancestor simulations. This isn’t a small step to make, and it draws in many other philosophical complications. For instance, is creating a conscious computer program as easy as replicating a human brain in code, or is there more to it? I’ll get into this and more in the next installment of FFtech’s Simulation Argument series, so check back soon.

Sources: https://www.youtube.com/watch?v=nnl6nY8YKHs http://www.simulation-argument.com/simulation.html

Are All Animals Doomed For Extinction? Part 4 – Is There Hope?

09 years

This is the final article in FFtech’s De-extinction and Conservation Tech series. To read the first article, go HERE, to read the second article, go HERE, and you can find our most recent article HERE.

So, is there hope for the animals of Earth? That’s the million dollar question. Are an ever-growing number of species ultimately doomed to extinction? While this question may be impossible to answer yet, it is certainly an outcome we all hope to avoid.

In the present, we can do some things to slow the biggest contributors to species extinction, such as deforestation and poaching. Efforts are underway in battlegrounds such as the Amazon rainforest to reduce the heartless logging of the forests. So much forest is cut down that every minute, roughly 36 football fields of trees are destroyed, along with the homes of countless animals, plants, insects, fungi and more amazing life. Many efforts to stop the poaching of rhinos and elephants in Africa and Asia are also taking place, targeting not only the poachers themselves but also the incredibly damaging market for their tusks and horns in China and other countries, and leveraging the star power of local celebrities to amplify the message. Tons of others are chipping in, and yet the trend is still not significantly changing.

Who am I to say that we’re doomed, though? People have miraculously come together to do great things before, and I’m sure they can do that again for causes like these as well. After all, our animals are what differentiates Earth from some other floating rock out in the Milky Way.

But just stopping this one problem won’t be enough. Overpopulation will drive people into the habitats of more animals. Global warming will continue to melt the ice caps, not only destroying the home of many Arctic and Antarctic species, but also causing rising sea levels that threaten to drown countless other animals living near coastlines across the world. It is not only the problems directly relating to animals that hurt the survival chances of Earth’s fauna; other, more broadly human-caused problems do too. If we are going to be saved by some miracle technology that stops global warming, and the global population levels out, so be it. To be honest, I highly doubt that will happen. For many of these problems, at least, humanity will have to exercise its altruistic muscles and see if we can fix the wrongs that the rise of Homo sapiens has brought upon the natural world. As interesting as I find the fields of cosmology and astronomy, at least as much attention and money should be spent studying the fragile ecosystems of the great world we live on, rather than already looking forward to abandoning this planet for another one that is probably not as unique and fascinating as the one we already have.

“Look again at that dot. That’s here. That’s home. That’s us. On it everyone you love, everyone you know, everyone you ever heard of, every human being who ever was, lived out their lives. The aggregate of our joy and suffering, thousands of confident religions, ideologies, and economic doctrines, every hunter and forager, every hero and coward, every creator and destroyer of civilization, every king and peasant, every young couple in love, every mother and father, hopeful child, inventor and explorer, every teacher of morals, every corrupt politician, every “superstar,” every “supreme leader,” every saint and sinner in the history of our species lived there – on a mote of dust suspended in a sunbeam.

The Earth is a very small stage in a vast cosmic arena. Think of the endless cruelties visited by the inhabitants of one corner of this pixel on the scarcely distinguishable inhabitants of some other corner, how frequent their misunderstandings, how eager they are to kill one another, how fervent their hatreds. Think of the rivers of blood spilled by all those generals and emperors so that, in glory and triumph, they could become the momentary masters of a fraction of a dot.

Our posturings, our imagined self-importance, the delusion that we have some privileged position in the Universe, are challenged by this point of pale light. Our planet is a lonely speck in the great enveloping cosmic dark. In our obscurity, in all this vastness, there is no hint that help will come from elsewhere to save us from ourselves.

The Earth is the only world known so far to harbor life. There is nowhere else, at least in the near future, to which our species could migrate. Visit, yes. Settle, not yet. Like it or not, for the moment the Earth is where we make our stand.

It has been said that astronomy is a humbling and character-building experience. There is perhaps no better demonstration of the folly of human conceits than this distant image of our tiny world. To me, it underscores our responsibility to deal more kindly with one another, and to preserve and cherish the pale blue dot, the only home we’ve ever known.”

– Carl Sagan

Thanks for sticking with me through this whole conversational journey! If you want to check out the first, second and third articles in the series, go HERE.

Sources: http://www.biologicaldiversity.org/programs/biodiversity/elements_of_biodiversity/extinction_crisis/ http://wwf.panda.org/about_our_earth/biodiversity/biodiversity/ http://www.nature.com/news/2011/110823/full/news.2011.498.html

Are All Animals Doomed For Extinction? Part 3 – Noah’s DNA Ark

09 years

This is the third installment in Fast Forward’s De-extinction & Conservation tech series. For the first article, click HERE, and to read the second article, go HERE.

What happens if we can’t stop the demise of a rising share of Earth’s species? What if, in a worst-case scenario, we actually can’t halt the extinctions? While clearly an extreme case scenario, if the conservation techniques discussed in the previous articles in this series fail, this outcome starts to become worthy of contemplation. Most likely, habitat destruction would be the cause of accelerating extinctions, and with fewer habitable ecosystems, utilizing frozen tissue samples (see second article) to relocate populations to new locales may become one of our only options. This is clearly speculative, but as animals all over the world are losing their homes by the day, it may not be as far off as we hope.

An alternative hope could be rapidly developing technologies such as 3D printing. With a strong library of species DNA, we could potentially “3D-print animals” to populate whatever space we find for them. Problems are many, including technical obstacles as well as the lack of adequate DNA samples to restore balanced ecosystems. That’s where, and I’m surprised I have to say this, the Russians come in.

Russia has granted Moscow State University its second-biggest scientific grant ever for a project called “Noah’s Ark”, which is essentially a giant databank consisting of DNA from every single living and near-extinct species. That is a heck of a big job, but apparently the Russians are ready to take it head on. “It will enable us to cryogenically freeze and store various cellular materials, which can then reproduce. It will also contain information systems. Not everything needs to be kept in a petri dish,” said MSU rector Viktor Sadovnichy.

The physical building designed to house the DNA library is set to be completed in 2018, with its gigantic size reflecting the magnitude of the task at hand. The university’s incredible task could take decades: there are estimated to be 8.7 million species, with an estimated 86% of land species and 91% of all marine species yet to be discovered. At the current rate of field taxonomy, it would take more than 400 years to discover every species on Earth. So even if the scientists manage to sample a good majority of the species we have already found, they will have a long way to go before taking a full backup of Earth’s genetic data.
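To get a feel for the scale, here is a rough back-of-the-envelope sketch in Python. It rests on two simplifications of my own: it applies the 86% land figure to all 8.7 million species (the marine figure is 91%, so this slightly undercounts), and it treats "more than 400 years" as exactly 400. The function names are made up for this illustration.

```python
TOTAL_SPECIES = 8_700_000   # estimated number of species, from the article
UNDISCOVERED_SHARE = 0.86   # assumption: the land-species share applied to all species
YEARS_TO_FINISH = 400       # assumption: "more than 400 years" treated as exactly 400


def undiscovered_species(total: int, share: float) -> float:
    """Estimated number of species still waiting to be discovered."""
    return total * share


def implied_yearly_rate(total: int, share: float, years: float) -> float:
    """Discoveries per year implied by finishing the catalogue in `years` years."""
    if years <= 0:
        raise ValueError("years must be positive")
    return undiscovered_species(total, share) / years


if __name__ == "__main__":
    remaining = undiscovered_species(TOTAL_SPECIES, UNDISCOVERED_SHARE)
    rate = implied_yearly_rate(TOTAL_SPECIES, UNDISCOVERED_SHARE, YEARS_TO_FINISH)
    print(f"Species still undiscovered: about {remaining:,.0f}")
    print(f"Implied pace: about {rate:,.0f} new species per year")
```

Under those assumptions, roughly 7.5 million species are still out there, and cataloguing them in 400 years means finding about 18,700 new species every single year.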

This is the third installment in the four-part series on De-Extinction & Conservation tech. Check back here soon for the last article in the series!

Sources: http://rt.com/news/217747-noah-ark-russia-biological/ http://www.nature.com/news/2011/110823/full/news.2011.498.html http://www.nydailynews.com/news/world/russia-build-noah-ark-world-dna-databank-article-1.2059704